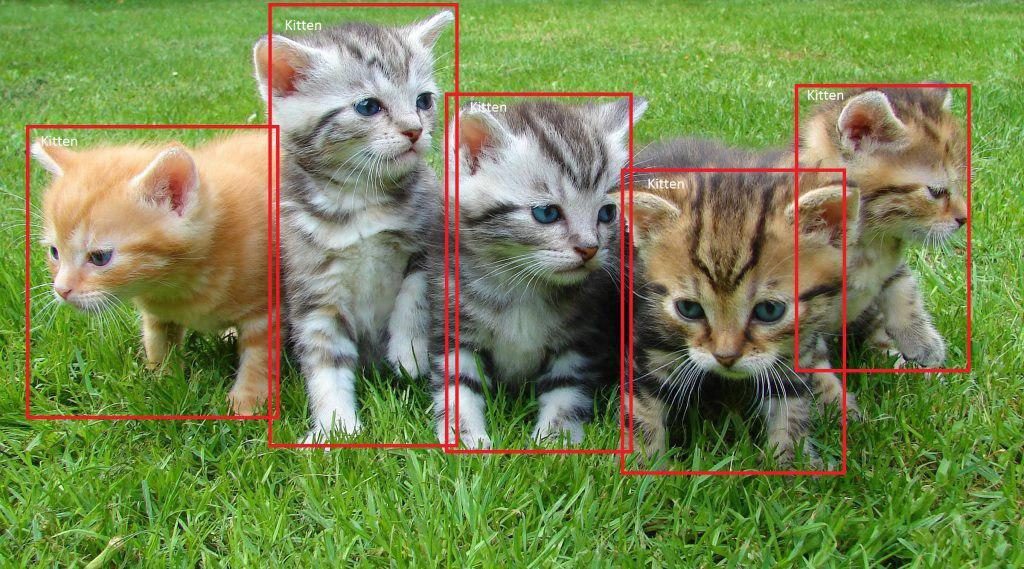

Finding objects in images or video streams is useful in countless applications. These range from toy examples, such as finding socks in a room, to life-saving apps that detect early stages of cancer.

A decade ago, only large corporations with huge computing resources could afford this task. Today, anyone who knows a bit of Python can build object detection software and use it for their own purposes.

Let’s break the work into a few simple tasks:

- Select the computing power we are going to use.

- Select a framework.

- Prepare the data.

- Train our model.

- Run inference on images.

How training happens

In simple terms, training a model comes down to a huge number of matrix multiplications. We could use a home laptop, but training would take a while. A computer with a GPU available for computation speeds this up considerably, because a GPU performs many multiplications in parallel. For this case we use an AWS spot instance with a GPU and CUDA preinstalled (I use Deep Learning AMI (Ubuntu) Version 23.1).
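To make the "matrix multiplication" point concrete, here is a minimal, dependency-free Python sketch of the forward pass of one fully connected layer, relu(x·W + b). The names `matmul` and `dense_forward` are illustrative and not part of any framework; real frameworks run the same arithmetic as heavily optimized GPU kernels.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix, both stored as nested lists."""
    if not a or not b or len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    n, k, m = len(a), len(b), len(b[0])
    # Each output cell is the dot product of one row of `a` and one column of `b`,
    # so the whole product costs n * k * m multiply-adds.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)] for i in range(n)]


def dense_forward(x: Matrix, w: Matrix, bias: List[float]) -> Matrix:
    """Forward pass of one fully connected layer: relu(x @ w + bias) for a batch x."""
    z = matmul(x, w)
    return [[max(0.0, v + bias[j]) for j, v in enumerate(row)] for row in z]


# A batch of one input with two features, fed into a layer with two units.
print(dense_forward([[1.0, 2.0]], [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]))  # prints [[2.0, 0.0]]
```

Multiplying two 1000 × 1000 matrices this way already takes 10^9 multiply-adds, and training repeats such products for every layer and every batch, which is why moving the work to a GPU pays off.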

Specifically, we request a p2.xlarge spot instance, which comes with a GPU, for as long as training takes.

As the framework, I have chosen the TensorFlow Object Detection API.

Once you have followed the tutorial provided and installed all the necessary dependencies and models, we are ready to prepare our data. For this we will use LabelImg, which is available for all major platforms: Mac, Windows and Linux. The process is relatively straightforward (for further info, please refer to the video). It is also worth mentioning that data preparation should be done in line with the TensorFlow API's requirements. Once the objects in your images are labelled, split the images into train and test directories and create the object files.
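The labelling and splitting step can be sketched in plain Python. This is a minimal sketch that assumes LabelImg's default Pascal VOC XML output, with each `.xml` annotation saved next to its image under the same base name; the helpers `parse_voc`, `train_test_split` and `copy_split` are hypothetical names for illustration, not part of LabelImg or the TensorFlow API. It reads the labelled boxes from an annotation and splits the image list into train and test sets reproducibly.

```python
import random
import shutil
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import List, Tuple

Box = Tuple[str, int, int, int, int]  # (class name, xmin, ymin, xmax, ymax) in pixels


def parse_voc(xml_text: str) -> Tuple[str, List[Box]]:
    """Read the image filename and labelled boxes from one Pascal VOC annotation."""
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        # Coordinates are stored as text; float() first tolerates values like "10.0".
        xmin, ymin, xmax, ymax = (
            int(float(bndbox.findtext(tag))) for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append((obj.findtext("name"), xmin, ymin, xmax, ymax))
    return root.findtext("filename"), boxes


def train_test_split(
    names: List[str], test_fraction: float = 0.2, seed: int = 42
) -> Tuple[List[str], List[str]]:
    """Deterministically shuffle image names and split them into (train, test)."""
    shuffled = sorted(names)  # sort first so the split does not depend on input order
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def copy_split(names: List[str], src_dir: str, dst_dir: str) -> None:
    """Copy each image and its same-named .xml annotation (assumed layout) into dst_dir."""
    src, dst = Path(src_dir), Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for name in names:
        annotation = Path(name).with_suffix(".xml").name
        shutil.copy2(src / name, dst / name)
        shutil.copy2(src / annotation, dst / annotation)


sample = (
    "<annotation><filename>sock1.jpg</filename>"
    "<object><name>sock</name><bndbox><xmin>10</xmin><ymin>20</ymin>"
    "<xmax>110</xmax><ymax>220</ymax></bndbox></object></annotation>"
)
print(parse_voc(sample))  # ('sock1.jpg', [('sock', 10, 20, 110, 220)])
train, test = train_test_split([f"img{i}.jpg" for i in range(10)])
print(len(train), len(test))  # 8 2
```

Fixing the random seed keeps the same images in the test set across runs, so evaluation results stay comparable after retraining. With the split in hand, `copy_split(train, "images", "images/train")` and `copy_split(test, "images", "images/test")` would populate the two directories (the directory names are examples).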

The remaining steps are:

- Train the model.

- Evaluate the model. When this is done, we can launch TensorBoard to see how well the model has trained and how it finds objects in the test images.

- Export the model to a location of your choice.
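Evaluation in the TensorFlow Object Detection API reports metrics such as mean average precision (mAP), which rest on the intersection over union (IoU) between predicted and ground-truth boxes. As a rough illustration, and not the API's own evaluation code, here is the IoU calculation together with the PASCAL VOC-style rule that counts a detection as correct when its IoU with a ground-truth box is at least 0.5. The names `iou` and `is_match` are illustrative.

```python
from typing import Sequence, Tuple

BoxXY = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def iou(box_a: BoxXY, box_b: BoxXY) -> float:
    """Intersection over union of two axis-aligned boxes."""
    # Overlap rectangle; width or height is clamped to 0 when the boxes do not overlap.
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_match(predicted: BoxXY, ground_truth: Sequence[BoxXY], threshold: float = 0.5) -> bool:
    """True if the predicted box overlaps any ground-truth box with IoU >= threshold."""
    return any(iou(predicted, gt) >= threshold for gt in ground_truth)


print(iou((0, 0, 10, 10), (5, 5, 15, 15)))  # 25 / 175, about 0.143
print(is_match((0, 0, 10, 10), [(1, 1, 10, 10)]))  # True (IoU = 0.81)
```

Two 10 × 10 boxes shifted by half their size overlap in a 5 × 5 square, so even a prediction that "looks close" can fall well below the 0.5 threshold, which is worth keeping in mind when reading the evaluation numbers.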

Using our model in production

Now we are ready to use our model in production. There are several options for running inference, such as:

- AWS Lambda function. This is a good fit if inference happens relatively rarely and there is no need for a server running full time.

- AWS EC2 CPU instance with a GPU accelerator. A relatively cheap option in which a regular CPU instance uses some GPU capacity when needed. It can be a bit cumbersome to set up.

- AWS EC2 GPU instance. If you have large volumes of data to process, or video to process in real time, this is the way to go.
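Whichever option you choose, the inference code has to turn raw model outputs into usable results. Models exported with the TensorFlow Object Detection API return, among other outputs, `detection_boxes` (normalized `[ymin, xmin, ymax, xmax]`), `detection_scores` and `detection_classes`. The sketch below assumes those arrays have already been fetched for a single image and converted to Python lists; it keeps detections above a score threshold and converts boxes to pixel coordinates. `postprocess`, `min_score` and the `category_names` mapping are hypothetical names for illustration.

```python
from typing import Dict, List, Optional, Sequence


def postprocess(
    boxes: Sequence[Sequence[float]],
    scores: Sequence[float],
    classes: Sequence[float],
    image_width: int,
    image_height: int,
    min_score: float = 0.5,
    category_names: Optional[Dict[int, str]] = None,
) -> List[dict]:
    """Keep confident detections and convert normalized boxes to pixel coordinates."""
    names = category_names or {}
    results = []
    for (ymin, xmin, ymax, xmax), score, cls in zip(boxes, scores, classes):
        if score < min_score:
            continue  # drop low-confidence detections
        class_id = int(cls)  # class ids may come back as floats
        results.append({
            "label": names.get(class_id, str(class_id)),
            "score": float(score),
            # Reorder to (xmin, ymin, xmax, ymax) and scale from [0, 1] to pixels.
            "box": (
                round(xmin * image_width),
                round(ymin * image_height),
                round(xmax * image_width),
                round(ymax * image_height),
            ),
        })
    return results


detections = postprocess(
    boxes=[[0.1, 0.2, 0.5, 0.6], [0.0, 0.0, 1.0, 1.0]],
    scores=[0.9, 0.3],
    classes=[1.0, 2.0],
    image_width=100,
    image_height=200,
    category_names={1: "sock"},
)
print(detections)  # [{'label': 'sock', 'score': 0.9, 'box': (20, 20, 60, 100)}]
```

Keeping this step as a plain function, independent of where the model runs, means the same code can be called from a Lambda handler, a CPU instance with an accelerator, or a GPU instance.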

If you need any AI or computer vision services, please get in touch for a free quote.